“Explain deadlocks and how to prevent them.”

If this question makes you uneasy, you’re not alone. Interviewing for a technical role, especially one involving operating systems, can feel overwhelming. But what if you could walk into your next interview prepared, confident, and ready to tackle even the trickiest questions?

If you’re getting ready for an interview in 2025, it’s crucial to have a solid understanding of operating systems. This guide to Operating Systems Interview Questions breaks down concepts, provides examples, and shares strategies to nail those tech interviews. Whether you are a fresher or a seasoned professional brushing up on your skills, this blog will arm you with the knowledge to impress your interviewer.

What is an Operating System (OS)? What Does It Do?

An Operating System (OS) is the software that acts like the middleman between you and the hardware of a computer. Think of it as the system that helps your computer run smoothly, letting you interact with the machine without needing to understand its inner workings.

What Does It Do?

- Manages Tasks: The Operating System (OS) keeps track of all the applications and programs running on your computer. For example, when you open a browser and play music simultaneously, the OS ensures both tasks run smoothly without interfering with each other.

- Handles Memory: It allocates memory (RAM) to various programs, ensuring that each gets enough to function properly. If you have limited RAM, the Operating System (OS) decides which tasks need priority, preventing crashes.

- Organizes Files: It provides a structured way to store, retrieve, and manage your data. Think of it as a filing cabinet, where each file is stored systematically for easy access.

- Connects Devices: The Operating System (OS) makes sure peripherals like printers, keyboards, and external drives connect seamlessly. For example, when you plug in a USB device, the OS detects and helps you use it.

- Keeps Things Secure: It acts as a shield, protecting your system from unauthorized access and potential threats like malware. Passwords, encryption, and user permissions are all managed by the Operating System (OS).

- Provides a User Interface (UI): This is how you interact with the computer. The Operating System (OS) offers two main types of UIs:

  - Graphical User Interface (GUI): You use icons, menus, and windows (e.g., macOS, Windows).

  - Command Line Interface (CLI): You type commands to perform actions (e.g., Linux terminal).

Operating System Interview Questions for Freshers – OS Basics

1. What is IPC (Inter-Process Communication)? How Does It Work?

IPC is a way for different programs (or processes) to talk to each other and share information. Imagine it like passing notes in a classroom, but digitally.

Ways Processes Communicate (Mechanisms):

- Pipes: Think of this as a one-way street where one process writes data, and another reads it.

- Message Queues: Similar to a mailbox where processes can drop and pick up messages.

- Shared Memory: Like a bulletin board where processes can write and read together.

- Semaphores: Tools that help processes take turns without clashing.

- Sockets: Used for communication over a network, or between processes on the same machine.

2. Why Do We Need an OS? What Are the Types?

An OS is like the brain of your computer, ensuring everything runs smoothly. Without it, your hardware and software wouldn’t work together.

Different Types of Operating System (OS):

- Batch OS: Executes batches of tasks without user interaction.

- Time-Sharing OS: Lets multiple users share computer time.

- Distributed OS: Connects multiple computers to work as one.

- Real-Time OS (RTOS): Handles tasks that need instant responses.

- Network OS: Designed for managing network resources.

- Mobile OS: Runs on smartphones and tablets.

3. What Are Popular Operating Systems?

- Windows (e.g., Windows 10, Windows 11)

- macOS

- Linux (e.g., Ubuntu, Fedora)

- Android

- iOS

- Chrome OS

4. Why Use Multiple Processors in a System?

Using more than one processor makes a computer:

- Faster: More tasks are done at the same time.

- Reliable: If one processor fails, others keep working.

- Scalable: You can add more processors as needed.

- Cost-Effective: Shared resources save money.

5. What is RAID, and Why Is It Useful?

RAID (Redundant Array of Independent Disks) is a method of combining multiple drives so that data is stored more reliably, accessed more quickly, or both.

Types of RAID:

- RAID 0: Splits data for faster performance but no backup.

- RAID 1: Copies data for safety.

- RAID 5 & 6: Stripe data with parity, balancing speed and fault tolerance. RAID 5 survives one failed drive; RAID 6 survives two.

- RAID 10: Combines the best of RAID 0 and RAID 1.
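To make the idea of redundancy in parity-based RAID levels concrete, here is a minimal sketch of XOR parity. The block contents and the `xor_blocks` helper are made up for illustration; real RAID controllers work on much larger stripes and rotate parity across drives.

```python
def xor_blocks(*blocks: bytes) -> bytes:
    """XOR several equal-length byte blocks together."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


# Three hypothetical data blocks, one per drive.
disk_a = b"OPER"
disk_b = b"ATIN"
disk_c = b"GSYS"

# The parity block is stored on a fourth drive.
parity = xor_blocks(disk_a, disk_b, disk_c)

# If disk_b fails, XOR-ing the survivors with the parity rebuilds it.
rebuilt_b = xor_blocks(disk_a, disk_c, parity)
print(rebuilt_b == disk_b)
```

Because XOR is its own inverse, any single missing block can be recovered from the remaining blocks plus the parity.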

6. What is a GUI?

A Graphical User Interface (GUI) lets you interact with a computer using icons, buttons, and menus instead of typing commands. It’s what makes computers user-friendly.

7. What is a Pipe, and When is It Used?

A Pipe is like a one-way road for data between programs. For example, in Linux, you might use a pipe to send the output of one command directly into another.
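As a rough illustration, the sketch below creates an anonymous pipe with Python's `os.pipe()` and pushes a message through it. For brevity both ends live in one process here; in practice the writer and reader are usually separate processes.

```python
import os

# os.pipe() returns two file descriptors: one for reading, one for writing.
read_fd, write_fd = os.pipe()

# Data goes in at the write end...
os.write(write_fd, b"hello through the pipe")
os.close(write_fd)  # closing the write end tells the reader no more data is coming

# ...and comes out at the read end, in the same order.
received = os.read(read_fd, 1024)
os.close(read_fd)

print(received.decode())
```

Data flows in one direction only. For two-way communication you would typically open two pipes or use a socket.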

8. What Do Semaphores Do?

Semaphores are like traffic signals for processes, ensuring they don’t all use the same resource at the same time.

9. What is a Bootstrap Program?

This is the first program your computer runs when it starts. It loads the operating system into memory so you can start using the computer.

10. What is Demand Paging?

Demand Paging only loads parts of a program into memory when needed. This saves memory and speeds up processes.

11. What is the Definition of RTOS?

A Real-Time Operating System (RTOS) is an Operating System (OS) designed to process tasks within a strict time frame. It’s commonly used in systems requiring high reliability and quick response times, like medical devices, robots, or automotive systems.

12. What is Process Synchronization?

This ensures multiple programs or processes don’t mess up shared data while working together.

13. How is Main Memory Different from Secondary Memory?

- Main Memory (RAM): Temporary, faster, and used while the computer is running.

- Secondary Memory (Hard Drive/SSD): Permanent, slower, and used to store data long-term.

14. What are Overlays?

Overlays are a way to run large programs on computers with limited memory. Instead of loading the entire program into memory, only the parts needed at a given time (the overlays) are loaded, and each new overlay replaces one that is no longer needed.

15. What Are the Top 10 Operating Systems?

- Windows 10

- Windows 11

- macOS Ventura

- Ubuntu

- Fedora

- Android

- iOS

- Chrome OS

- Solaris

- Debian

16. What Causes Deadlocks?

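Deadlocks happen when all four of these conditions hold at the same time:

- A resource can be used by only one process at a time (mutual exclusion).

- A process keeps a resource while waiting for another (hold and wait).

- Resources can’t be forcibly taken away (no preemption).

- Processes form a circular chain of waiting (circular wait).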

17. How Are Server Systems Classified?

Server systems are categorized based on their primary functions:

- File Servers: Manage and share file storage.

- Database Servers: Handle database queries and management.

- Web Servers: Serve web pages and host websites.

- Application Servers: Run specific applications or services for client use.

- Proxy Servers: Act as intermediaries between users and the internet, enhancing security and performance.

18. What Are Different Thread Models?

- Many-to-One Model: Multiple user threads share one kernel thread. Simple but limits performance.

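- One-to-One Model: Each user thread gets its own kernel thread. Great performance but uses more resources.

- Many-to-Many Model: Many user threads are multiplexed over a smaller or equal number of kernel threads, balancing concurrency against resource use.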

19. What is Worst-Fit and Best-Fit Memory Allocation?

- Worst-Fit: Assigns the largest available block of memory. Leaves big gaps for later use.

- Best-Fit: Assigns the smallest block that fits, reducing wasted space.
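The two strategies can be sketched as follows. The free-block sizes and the function names are hypothetical, and a real allocator would also split the chosen block and track the leftover space.

```python
from typing import List, Optional


def best_fit(free_blocks: List[int], request: int) -> Optional[int]:
    """Return the index of the smallest free block that can hold the request."""
    candidates = [(size, i) for i, size in enumerate(free_blocks) if size >= request]
    return min(candidates)[1] if candidates else None


def worst_fit(free_blocks: List[int], request: int) -> Optional[int]:
    """Return the index of the largest free block that can hold the request."""
    candidates = [(size, i) for i, size in enumerate(free_blocks) if size >= request]
    return max(candidates)[1] if candidates else None


# Hypothetical free list (sizes in KB) and a 212 KB request.
free_blocks = [100, 500, 200, 300, 600]
print(best_fit(free_blocks, 212))   # index of the 300 KB block
print(worst_fit(free_blocks, 212))  # index of the 600 KB block
```

Best-fit leaves an 88 KB sliver here, while worst-fit leaves a 388 KB remainder that is more likely to be reusable.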

20. What is Virtual Memory in an Operating System?

Virtual memory is a technique used by the operating system to extend the computer’s physical memory (RAM) by using a portion of the storage drive as temporary memory. It allows the system to handle larger workloads or run multiple applications by moving less-used data to a reserved space on the hard drive or SSD, called the paging file.

Operating System Interview Questions for Intermediate

Let’s break down these operating system concepts in a human-friendly manner to help you explain them clearly in interviews:

1. What is Thrashing in OS?

Thrashing occurs when the system spends the majority of its time swapping data between RAM and disk (paging), instead of executing processes. This happens when there isn’t enough physical memory to handle the demands of running processes, causing excessive page faults.

Example for Interview:

- “If multiple processes are competing for memory, and the system keeps swapping data between RAM and disk, it can cause thrashing, leading to performance degradation.”

2. What is the Main Objective of Multiprogramming?

The main goal of multiprogramming is to maximize CPU utilization by running multiple processes concurrently. It allows the CPU to switch between processes so that while one is waiting for I/O operations, another can use the CPU.

Example for Interview:

- “In a multiprogramming system, multiple programs are loaded into memory, and the CPU executes them in a way that keeps the system busy, even if some programs are waiting for input.”

3. What Do You Mean by Asymmetric Clustering?

In asymmetric clustering, one system (the primary node) is actively processing tasks, while the others are on standby. If the primary system fails, one of the standby systems takes over. This setup provides high availability and fault tolerance.

Example for Interview:

- “Asymmetric clustering is like having a backup system ready to take over only if the primary system fails, which ensures continuous availability.”

4. What is the Difference Between Multitasking and Multiprocessing OS?

- Multitasking refers to an operating system’s ability to run multiple tasks or processes seemingly at the same time by quickly switching the CPU between them (time-sharing).

- Multiprocessing involves the use of multiple processors (or cores) to execute multiple processes simultaneously.

Example for Interview:

- “In multitasking, a single CPU handles multiple tasks by switching between them rapidly, while in multiprocessing, multiple CPUs handle tasks simultaneously.”

5. What Do You Mean by Sockets in OS?

A socket is an endpoint for communication between two machines or processes. Sockets allow data to be sent and received over a network. They are commonly used in client-server models for network communication.

Example for Interview:

- “A socket enables communication between a web server and a browser, where the server sends the data and the browser receives it using a socket.”
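Here is a minimal sketch of two connected socket endpoints exchanging a request and a response. It uses `socket.socketpair()` so it runs without a network; the message contents are made up, and a real client and server would use `connect()` and `accept()` on an address instead.

```python
import socket

# socketpair() returns two already-connected endpoints.
server_end, client_end = socket.socketpair()

# The "browser" sends a request...
client_end.sendall(b"GET /index.html")
request = server_end.recv(1024)

# ...and the "server" answers on the same connection.
server_end.sendall(b"200 OK")
response = client_end.recv(1024)

server_end.close()
client_end.close()

print(request, response)
```

Unlike a pipe, each socket endpoint can both send and receive.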

6. What Is a Zombie Process?

A zombie process is a process that has completed execution, but still has an entry in the process table. This happens because its parent process hasn’t yet read its exit status. The process is considered “dead” but hasn’t been fully cleaned up by the OS.

Example for Interview:

- “Imagine a child process that has finished, but its parent hasn’t acknowledged it, so it remains in the process table as a zombie.”

7. What Do You Mean by Cascading Termination?

Cascading termination happens when a process terminates, and as a result, all its child processes are also terminated. This helps prevent orphaned or unnecessary processes from running.

Example for Interview:

- “If a parent process ends and all its children are also terminated automatically, this is cascading termination, which helps in proper resource management.”

8. What Is Starvation and Aging in OS?

- Starvation occurs when a low-priority process is perpetually delayed because higher-priority processes keep getting resources first.

- Aging is a technique to prevent starvation by gradually increasing the priority of processes that have been waiting for a long time.

Example for Interview:

- “In a system using priority scheduling, a low-priority task might starve, but aging ensures that it eventually gets CPU time by boosting its priority.”
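The effect of aging can be sketched with a toy model. The assumptions here are for illustration only: a lower number means higher priority, a fresh high-priority job arrives on every tick, and a tie goes to the job that has waited longest.

```python
from typing import Optional


def ticks_until_scheduled(low_priority: int, high_priority: int,
                          aging_step: int, max_ticks: int = 1000) -> Optional[int]:
    """Return the tick at which the waiting job finally runs, or None if it starves."""
    effective = low_priority
    for tick in range(max_ticks):
        if effective <= high_priority:
            return tick          # the waiting job now wins the comparison
        effective -= aging_step  # aging: priority improves while waiting
    return None


print(ticks_until_scheduled(10, 1, aging_step=1))  # runs after a bounded wait
print(ticks_until_scheduled(10, 1, aging_step=0))  # no aging: starves
```

With aging switched off, the job never gets the CPU within the simulated window, which is exactly the starvation described above.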

9. What Is the Difference Between Paging and Segmentation?

- Paging divides a process’s logical memory into fixed-size blocks called pages, which are mapped onto physical frames of the same size. It avoids external fragmentation but can cause internal fragmentation, because the last page of a process is rarely full.

- Segmentation divides memory into logical segments based on the process’s structure (e.g., code, data, stack), making it easier to manage, but can lead to external fragmentation.

Example for Interview:

- “Paging is like dividing a book into pages, while segmentation is like dividing it into chapters, each with a specific purpose.”
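To show how paging translates an address, here is a small sketch. The 4 KB page size and the page table contents are assumptions chosen for the example.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def translate(logical_address: int, page_table: dict) -> int:
    """Map a logical address to a physical address using a page table."""
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    frame_number = page_table[page_number]  # a missing entry would be a page fault
    return frame_number * PAGE_SIZE + offset


# Hypothetical page table: page number -> frame number.
page_table = {0: 5, 1: 2, 2: 7}

# Address 8200 sits in page 2 at offset 8, so it maps into frame 7.
print(translate(8200, page_table))
```

The offset within the page is unchanged by translation; only the page number is swapped for a frame number.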

10. What Is a Real-Time System?

A real-time system is designed to process data and provide results within a specific time constraint. These systems are used where timely responses are crucial, such as in medical devices or flight control systems.

Example for Interview:

- “In a real-time operating system, tasks must be completed within a guaranteed timeframe, like controlling the brakes of a car in an anti-lock braking system.”

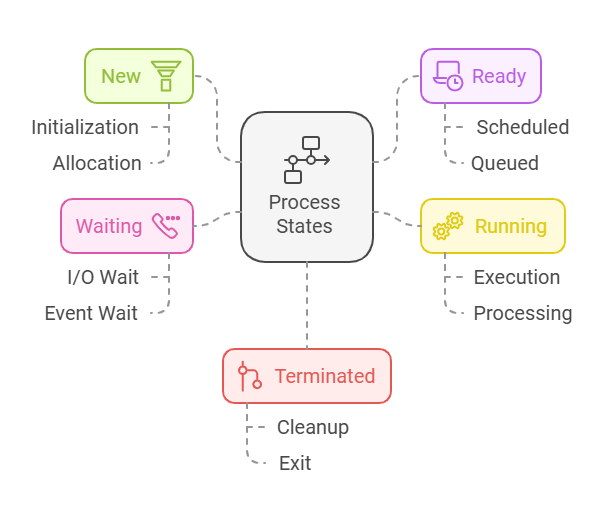

11. What Are the Different States of a Process?

The process can be in several states during its life cycle:

- New: The process is being created.

- Ready: The process is waiting for CPU time.

- Running: The process is executing.

- Waiting: The process is waiting for an event (like I/O).

- Terminated: The process has finished execution.

Example for Interview:

- “A process moves between these states, much like a task moving through different stages in a project.”
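The life cycle can be modelled as a small state machine. The transition table below follows the common five-state textbook model; the names `State`, `ALLOWED`, and `can_transition` are only illustrative.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Which states each state may move to in the five-state model.
ALLOWED = {
    State.NEW: {State.READY},                                      # admitted
    State.READY: {State.RUNNING},                                  # dispatched
    State.RUNNING: {State.READY, State.WAITING, State.TERMINATED}, # preempted, blocked, or exited
    State.WAITING: {State.READY},                                  # event completed
    State.TERMINATED: set(),
}


def can_transition(current: State, target: State) -> bool:
    return target in ALLOWED[current]


print(can_transition(State.RUNNING, State.WAITING))
print(can_transition(State.WAITING, State.RUNNING))
```

Note that a waiting process cannot jump straight back to running; it must return to the ready queue first.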

12. What Do You Mean by FCFS?

FCFS (First-Come, First-Served) is a scheduling algorithm where the process that arrives first gets executed first. It is simple but can lead to poor performance if short processes have to wait behind long ones (convoy effect).

Example for Interview:

- “In a queue, the first person to arrive gets served first, just like FCFS scheduling where the first process to arrive gets CPU time first.”
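The convoy effect is easy to see with numbers. In this sketch the burst times are hypothetical and all processes are assumed to arrive at time 0.

```python
def fcfs_waiting_times(burst_times):
    """Waiting time of each process when they run in arrival order."""
    waiting, elapsed = [], 0
    for burst in burst_times:
        waiting.append(elapsed)  # a process waits for everything ahead of it
        elapsed += burst
    return waiting


long_first = fcfs_waiting_times([24, 3, 3])
short_first = fcfs_waiting_times([3, 3, 24])

print(long_first, sum(long_first) / len(long_first))
print(short_first, sum(short_first) / len(short_first))
```

When the long job arrives first, the average wait is 17 time units; when it arrives last, the average drops to 3.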

13. What Is Reentrancy?

Reentrancy refers to the ability of a function to be interrupted in the middle of execution and safely called again before the previous executions are complete. Reentrant functions do not rely on modifiable static or global state; they work only on their arguments and local variables.

Example for Interview:

- “A reentrant function keeps all of its working data in parameters and local variables, so it can be safely interrupted and called again, for example from a signal handler or from several threads at once.”

14. What Is a Scheduling Algorithm? Name Different Types of Scheduling Algorithms.

A scheduling algorithm determines which process or thread the CPU should execute next. Common algorithms include:

- FCFS: Processes are executed in the order they arrive.

- Round Robin: Each process gets an equal time slice.

- Priority Scheduling: Processes with higher priority are executed first.

- Shortest Job First (SJF): The process with the shortest execution time is scheduled first.

Example for Interview:

- “Scheduling algorithms help determine how processes are allocated CPU time in a way that maximizes efficiency or fairness.”
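As one example from the list above, Round Robin can be simulated in a few lines. The burst times and the quantum are made up, and all processes are assumed to arrive at time 0.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return a dict mapping each process index to its completion time."""
    remaining = list(burst_times)
    queue = deque(range(len(burst_times)))
    clock = 0
    completion = {}
    while queue:
        pid = queue.popleft()
        run = min(quantum, remaining[pid])  # run for one time slice at most
        clock += run
        remaining[pid] -= run
        if remaining[pid] > 0:
            queue.append(pid)               # not finished: back of the queue
        else:
            completion[pid] = clock
    return completion


print(round_robin([24, 3, 3], quantum=4))
```

The two short jobs finish at times 7 and 10 instead of waiting behind the 24-unit job, which is the main advantage over FCFS for interactive workloads.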

15. What Is the Concept of Demand Paging?

Demand paging is a memory management technique where pages are loaded into memory only when they are needed, not when a program starts. This saves memory and can improve system performance.

Example for Interview:

- “If a program needs a specific data page that isn’t in memory, the OS will fetch it from disk when required, instead of loading everything upfront.”

16. When Does Thrashing Occur?

Thrashing occurs when the system spends more time swapping data between RAM and disk than executing processes. This typically happens when there is too little memory for the running processes.

Example for Interview:

- “Thrashing is like trying to juggle too many tasks at once; instead of completing tasks, you end up wasting time constantly switching between them.”

17. What Is a Batch Operating System?

A batch operating system processes jobs in batches without user interaction. Jobs are grouped together and processed sequentially.

Example for Interview:

- “In a batch system, you don’t interact with the computer while it processes your job; instead, you submit a batch of jobs, and they are executed one after the other.”

18. Does a Batch Operating System Interact with Users While Processing Jobs?

No, batch operating systems do not require user interaction once a job is submitted. They process tasks automatically without waiting for user input, making them efficient for repetitive tasks.

19. What Is a Process Control Block (PCB)?

A Process Control Block (PCB) is a data structure in the OS that stores all information about a process, such as its state, program counter, memory allocation, and CPU registers.

Example for Interview:

- “The PCB is essential for context switching. It allows the OS to save the state of a process when switching between processes.”

20. What Are the Fields Used in the Process Control Block?

The PCB may include information like:

- Process ID (PID)

- Program Counter

- CPU Registers

- Memory Management Information

- I/O Status Information

21. What Are the Issues Related to Concurrency?

Concurrency refers to multiple processes or threads executing simultaneously, which can lead to several issues:

- Race Conditions: When two or more threads/processes access shared resources and attempt to modify them at the same time, causing inconsistent or incorrect results.

- Deadlock: When two or more processes are waiting for each other to release resources, causing a standstill where no process can proceed.

- Starvation: When a process is indefinitely delayed because other processes keep acquiring resources or CPU time before it.

- Synchronization: Ensuring that multiple processes or threads operate in a controlled manner without conflicts.

Example for Interview:

- “In a banking system, two users might try to withdraw money from the same account at the same time. Without proper synchronization, the account balance might go negative, leading to inconsistencies.”
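The banking scenario can be sketched with a lock that makes the check and the update a single atomic step. The `Account` class and the amounts are hypothetical.

```python
import threading


class Account:
    def __init__(self, balance: int):
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount: int) -> bool:
        # The lock ensures no other thread can change the balance
        # between the check and the subtraction.
        with self._lock:
            if self.balance >= amount:
                self.balance -= amount
                return True
            return False


account = Account(100)
results = []

# Two users try to withdraw 80 from the same account at once.
threads = [
    threading.Thread(target=lambda: results.append(account.withdraw(80)))
    for _ in range(2)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(account.balance, results)
```

Without the lock, both threads could pass the balance check before either subtracts, which is the race condition described above.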

22. What Are the Drawbacks of Concurrency?

- Complexity: Writing concurrent programs is more complicated because developers need to ensure correct synchronization and avoid issues like deadlocks or race conditions.

- Overhead: Managing multiple threads or processes adds extra overhead, as the system must switch contexts, synchronize resources, and manage concurrency.

- Debugging Difficulty: Debugging concurrent programs is harder because issues may only arise in certain conditions (like specific timing or thread interleavings), making them intermittent and difficult to reproduce.

Example for Interview:

- “While concurrency improves performance in many scenarios, it can make debugging harder because problems like race conditions only occur under certain timing, making them elusive.”

23. How to Calculate Performance in Virtual Memory?

Performance in virtual memory is often measured using metrics such as:

- Page Fault Rate: The frequency with which a program accesses data that is not currently in physical memory (causing a page fault).

- Page Fault Service Time: The time it takes to handle a page fault, which involves swapping data from disk to memory.

- Effective Memory Access Time (EMAT): This combines both the time it takes to access data in memory and the time it takes to handle page faults.

- The formula for EMAT is:

EMAT = (1 − p) × Memory Access Time + p × Page Fault Service Time

where p is the page fault rate, expressed as a probability between 0 and 1.

Example for Interview:

- “The page fault rate directly impacts performance because frequent page faults lead to more disk I/O, which is much slower than memory access.”
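A quick worked example of the EMAT formula, using assumed figures: a 200 ns memory access, an 8 ms page fault service time, and one fault per 1,000 accesses.

```python
def effective_access_time(p: float, memory_access_ns: float, page_fault_ns: float) -> float:
    """EMAT = (1 - p) * memory access time + p * page fault service time."""
    return (1 - p) * memory_access_ns + p * page_fault_ns


# Assumed figures for illustration only.
emat = effective_access_time(p=0.001, memory_access_ns=200, page_fault_ns=8_000_000)
print(round(emat, 1), "ns")
```

Even with a fault rate of only 0.1%, the effective access time comes out at roughly 8,200 ns, about 40 times slower than a plain memory access.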

24. What Is Rotational Latency?

Rotational latency refers to the time it takes for the desired disk sector to rotate under the read/write head of a hard drive. This time depends on the disk’s rotational speed (measured in RPM, rotations per minute).

Example for Interview:

- “If you’re accessing a file that’s located on a specific sector of the disk, rotational latency is the time the disk takes to rotate until that sector is positioned correctly under the read/write head.”
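On average the desired sector is half a rotation away, so the average rotational latency can be estimated from the RPM alone. The drive speeds below are common examples, not figures from a specific product.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Average latency is the time for half of one full rotation."""
    ms_per_rotation = 60_000 / rpm  # 60,000 ms in a minute
    return ms_per_rotation / 2


for rpm in (5400, 7200, 15000):
    print(rpm, "RPM:", round(average_rotational_latency_ms(rpm), 2), "ms")
```

A 7200 RPM disk takes about 8.33 ms per rotation, giving an average rotational latency of about 4.17 ms.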

25. What Is the Purpose of Having Redundant Data?

Redundant data is used to ensure reliability and availability. If one copy of data is lost or corrupted, the system can rely on a backup copy to restore the data, minimizing downtime and data loss.

- Fault Tolerance: Systems like RAID use redundant data across multiple disks for fault tolerance.

- Data Recovery: Redundant data allows for easier and quicker recovery in case of failures.

Example for Interview:

- “In cloud storage, data is often duplicated across multiple servers to ensure that if one server fails, the data is still accessible from another server.”

26. How Are One-Time Passwords Implemented?

One-Time Passwords (OTPs) are temporary passwords that are valid for only a single login session or transaction. OTPs are typically implemented using:

- Time-based: The password changes periodically (e.g., every 30 seconds) based on a secret key and the current time.

- Challenge-Response: A system generates a random password, sends it to the user, and the user inputs it back to verify identity.

Example for Interview:

- “Online banking often uses OTPs for extra security. The user enters their username and password, and then an OTP is sent to their phone, which they must enter to complete the login.”
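The time-based variant can be sketched using only Python's standard library. This follows the general HOTP/TOTP construction (an HMAC over a counter derived from the current time); the secret below is a well-known test value, and a real deployment would rely on a vetted library and a securely provisioned key.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble picks 4 bytes
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP: the counter is the number of elapsed time steps."""
    now = time.time() if at_time is None else at_time
    return hotp(secret, int(now // step), digits)


secret = b"12345678901234567890"  # test secret, not for real use
print(totp(secret))
```

Because the server and the user's device share the secret and the clock, both can compute the same code independently, and it expires when the 30-second window ends.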

27. Define the Term Bounded Waiting

Bounded waiting is a condition that places a limit on how many times other processes may enter their critical sections after a process has requested entry and before that request is granted. It guarantees that no process waits indefinitely for a resource or for its turn to execute.

Example for Interview:

- “In an operating system with bounded waiting, if a process has to wait for a resource, it ensures that it won’t have to wait indefinitely, ensuring fairness.”

28. What Do Orphan Processes Refer To?

Orphan processes are processes that continue to run even after their parent process has terminated. The operating system assigns an orphan process a new parent process (on Unix-like systems, typically the init process) that collects its exit status when it terminates.

Example for Interview:

- “If a parent process crashes without terminating its child processes, those child processes become orphan processes. The OS reassigns them to a default parent process.”

29. What Is a Trap and a Trapdoor?

- Trap: A trap is an exception or an event that interrupts the normal flow of execution to handle some error or special condition, like a system call or an illegal instruction.

- Trapdoor: A trapdoor refers to a hidden method used to bypass normal security mechanisms. It’s often used maliciously to gain unauthorized access.

Example for Interview:

- “A trap can be a software interrupt, such as when a program requests a service from the OS. A trapdoor is like a backdoor in a system that allows an attacker to bypass security measures.”

30. What Is the Function of Compaction Within an Operating System?

Compaction is a memory management technique used to reduce fragmentation. It involves moving processes in memory so that free memory is consolidated into a larger, contiguous block.

Example for Interview:

- “When a system experiences fragmentation, compaction helps to rearrange memory to create larger free blocks, making it easier to allocate memory for new processes.”
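A toy model of compaction: allocations are slid toward address 0 so that the free space ends up as one block. The tuple layout `(name, start, size)` and the sample values are assumptions for this sketch.

```python
def compact(allocations):
    """Pack allocations contiguously from address 0, keeping their order.

    Returns the new allocation list and the address where free memory begins.
    """
    compacted = []
    next_free = 0
    for name, _old_start, size in sorted(allocations, key=lambda a: a[1]):
        compacted.append((name, next_free, size))
        next_free += size
    return compacted, next_free


# Hypothetical layout with holes between the processes.
before = [("A", 0, 100), ("B", 300, 50), ("C", 500, 200)]
after, free_starts_at = compact(before)
print(after, free_starts_at)
```

After compaction the three processes occupy addresses 0 to 349, and everything from 350 upward is a single free block. The cost in a real system is the copying of every moved process.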

Operating System Interview Questions for Experienced – Advanced Level

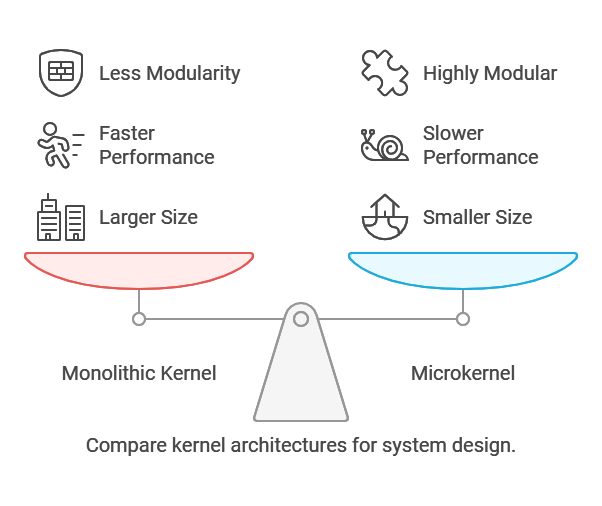

1. What Are the Different Types of Kernel?

There are several types of kernels, with the main ones being:

- Monolithic Kernel: A single large kernel that contains all the essential services (e.g., process management, file management) in one codebase.

- Microkernel: A minimalistic kernel that only provides essential services like communication between hardware and software, while other services are handled by user-space programs.

- Hybrid Kernel: A combination of monolithic and microkernel, offering flexibility by allowing some services to run in user space and others in kernel space.

- Exokernel: Focuses on providing minimal abstractions and gives more control to user applications.

- Nanokernel: A minimalistic kernel providing basic hardware abstractions.

Example for Interview:

- “Monolithic kernels are large and self-contained, while microkernels are more modular and lightweight, aiming for greater system flexibility.”

2. What Do You Mean by Semaphore in OS? Why Is It Used?

A semaphore is a synchronization tool used to manage access to shared resources in a concurrent system, avoiding race conditions. It consists of a variable or abstract data type that is used to control access by multiple processes in a multitasking environment.

- Counting Semaphore: Used for managing a limited number of resources.

- Binary Semaphore: Similar to a lock (binary flag), indicating whether a resource is available or not.

Why It’s Used: Semaphores prevent conflicts and ensure data integrity when multiple processes access shared resources.

Example for Interview:

- “Semaphores help avoid the issue of race conditions in a system, ensuring that one process doesn’t interrupt another while accessing shared resources.”
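A counting semaphore can be sketched with Python's `threading.Semaphore`. Here it limits a hypothetical resource to two concurrent users; the worker function and the bookkeeping dictionary exist only to show the effect.

```python
import threading
import time

MAX_CONCURRENT = 2
slots = threading.Semaphore(MAX_CONCURRENT)  # counting semaphore with 2 permits
stats = {"active": 0, "peak": 0}
stats_lock = threading.Lock()                # protects the bookkeeping itself


def worker():
    with slots:                              # acquire a permit (blocks if none left)
        with stats_lock:
            stats["active"] += 1
            stats["peak"] = max(stats["peak"], stats["active"])
        time.sleep(0.01)                     # pretend to use the shared resource
        with stats_lock:
            stats["active"] -= 1
    # leaving the outer "with" releases the permit


threads = [threading.Thread(target=worker) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("peak concurrent users:", stats["peak"])
```

Setting `MAX_CONCURRENT` to 1 turns this into a binary semaphore, which behaves much like a lock.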

3. What Is a Kernel, and What Are Its Main Functions?

The kernel is the core component of an operating system. It manages system resources and communication between hardware and software. It operates in privileged mode (kernel mode) to ensure efficient and secure access to system resources.

Main Functions of the Kernel:

- Process Management: Controls processes and their execution.

- Memory Management: Handles memory allocation and deallocation.

- Device Management: Manages input/output devices.

- System Calls: Provides an interface for user applications to request services from the operating system.

- File Management: Manages file systems and file access.

Example for Interview:

- “The kernel is the brain of the operating system (OS), handling tasks like process scheduling, memory management, and device I/O.”

4. What Is the Difference Between a Microkernel and a Monolithic Kernel?

- Monolithic Kernel: A single large kernel that provides all the operating system services, such as memory management, device drivers, file management, and system calls. It is fast but complex.

- Microkernel: A small kernel that provides only essential services (e.g., communication between hardware and software), while other functionalities (e.g., device drivers, file systems) are handled in user space.

Differences:

| Feature | Monolithic Kernel | Microkernel |
| --- | --- | --- |
| Size | Larger | Smaller |
| Performance | Faster (less overhead) | Slower (more context switches) |
| Modularity | Less modular | Highly modular |
| Reliability | Less reliable (single failure can crash the whole OS) | More reliable (isolates failures) |

Example for Interview:

- “Microkernels are more modular and fault-tolerant, while monolithic kernels are fast but harder to maintain due to their size.”

5. What Is SMP (Symmetric Multiprocessing)?

SMP is a system where multiple processors share a common memory space and work independently but equally to execute tasks. All processors have equal access to the memory and I/O devices.

Example for Interview:

- “In SMP systems, all processors work in parallel, and there is no master-slave relationship among them, allowing for improved performance and fault tolerance.”

6. What Is a Time-Sharing System?

A time-sharing system allows multiple users to access a computer system simultaneously by rapidly switching between them. Each user gets a small time slice of CPU time, making it appear as if they have exclusive use of the system.

Example for Interview:

- “In a time-sharing system, the CPU switches between users so quickly that they feel like they are the only ones using the computer.”

7. What Is Context Switching?

Context switching refers to the process of saving the state of a running process so that it can be resumed later, and loading the state of another process to execute it. This allows multiple processes to share the CPU.

Example for Interview:

- “Context switching is like pausing one task and picking up another, ensuring that the system can manage multiple tasks concurrently.”

8. What Is the Difference Between Kernel and Operating System?

- Kernel: The kernel is the core part of the operating system that directly interacts with hardware and manages low-level functions like process scheduling, memory management, and device management.

- Operating System (OS): An OS (operating system) includes the kernel but also provides additional functionalities like user interfaces, application programs, and utilities.

Example for Interview:

- “The kernel is the essential part of the operating system that controls hardware, while the operating system (OS) as a whole includes the kernel and other tools to support user interaction.”

9. What Is the Difference Between Process and Thread?

- Process: A process is an independent program in execution with its own memory space and resources. It is the unit of execution.

- Thread: A thread is a smaller unit of a process that shares memory and resources with other threads of the same process.

Differences:

| Feature | Process | Thread |
| --- | --- | --- |
| Memory | Has its own memory space | Shares memory with other threads |
| Execution | Independent execution | Shares CPU with other threads |
| Overhead | Higher overhead (due to separate memory) | Lower overhead (shares resources) |

Example for Interview:

- “A process is a complete program in execution, while a thread is a smaller execution unit within a process.”

10. What Are the Various Sections of a Process?

A process consists of several sections:

- Code Section: Contains the executable code.

- Data Section: Holds global variables and constants.

- Heap: Used for dynamic memory allocation.

- Stack: Used for function calls, local variables, and managing execution flow.

- Process Control Block (PCB): Stores information about the process such as its state, program counter, and scheduling information.

11. What Is a Deadlock in OS? What Are the Necessary Conditions for a Deadlock?

A deadlock occurs when two or more processes are unable to proceed because each is waiting for the other to release a resource. The necessary conditions for a deadlock are:

- Mutual Exclusion: At least one resource is held in a non-shareable mode.

- Hold and Wait: A process holding one resource is waiting for others.

- No Preemption: Resources cannot be forcibly taken from processes.

- Circular Wait: A circular chain of processes exists, each waiting for a resource held by the next process.

Example for Interview:

- “In a deadlock, processes are stuck in a waiting state because they are all holding resources and waiting for others.”
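One common way to prevent deadlock is to break the circular-wait condition by always acquiring locks in a fixed global order. The sketch below orders locks by `id()`, which is just one possible convention; the function and variable names are illustrative.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()


def in_global_order(*locks):
    """Sort locks so every thread acquires them in the same order."""
    return sorted(locks, key=id)


def use_both(first, second, log, label):
    # Whatever order the caller names the locks in, they are taken in
    # the agreed global order, so a circular wait cannot form.
    outer, inner = in_global_order(first, second)
    with outer:
        with inner:
            log.append(label)


log = []
t1 = threading.Thread(target=use_both, args=(lock_a, lock_b, log, "t1"))
t2 = threading.Thread(target=use_both, args=(lock_b, lock_a, log, "t2"))
t1.start()
t2.start()
t1.join()
t2.join()

print(sorted(log))
```

If each thread instead locked its first argument and then its second, `t1` could hold `lock_a` while `t2` holds `lock_b`, and both would wait forever.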

12. What Do You Mean by Belady’s Anomaly?

Belady’s anomaly occurs in some page replacement algorithms, most notably FIFO, where increasing the number of page frames results in an increase in the number of page faults, contrary to the expectation that more memory should reduce page faults.

Example for Interview:

- “Belady’s Anomaly shows that adding more memory doesn’t always improve performance in certain page replacement algorithms.”
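The anomaly can be reproduced with a short FIFO simulation. The reference string below is a commonly used textbook example; the function name is illustrative.

```python
from collections import deque


def fifo_page_faults(reference_string, frame_count):
    """Count page faults under FIFO replacement."""
    frames = deque()   # oldest page sits at the left
    resident = set()
    faults = 0
    for page in reference_string:
        if page in resident:
            continue                               # hit: FIFO order is unchanged
        faults += 1
        if len(frames) == frame_count:
            resident.discard(frames.popleft())     # evict the oldest page
        frames.append(page)
        resident.add(page)
    return faults


refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
print(fifo_page_faults(refs, 3))  # 9 faults
print(fifo_page_faults(refs, 4))  # 10 faults
```

Going from 3 frames to 4 raises the fault count from 9 to 10. Stack-based algorithms such as LRU do not show this behavior.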

13. What Is Spooling in OS?

Spooling refers to the process of putting data into a temporary storage area (like a buffer or queue) to be processed later, often used for tasks like printing. It helps in managing tasks that are slow and need to be handled asynchronously.

Example for Interview:

- “Spooling is like putting a file in a queue to be printed later, so the user doesn’t have to wait for the print task to complete before continuing with other tasks.”

14. What is Non-Preemptive Process Scheduling in Operating Systems?

In Non-Preemptive Process Scheduling, once a process starts executing, it continues until it completes or voluntarily yields the CPU (e.g., through I/O operations or explicitly giving up control). The operating system (OS) doesn’t forcibly interrupt or preempt a running process to give the CPU to another process.

Example for Interview:

- “In non-preemptive scheduling, a running process keeps the CPU until it finishes or blocks, so tasks are never interrupted mid-burst, but a long task can delay every process waiting behind it.”

15. What is the use of Dispatchers in Operating Systems?

The dispatcher is the component of the operating system that gives control of the CPU to the process selected by the scheduler. It performs the context switch, saving the state of the current process and loading the state of the new process, and then resumes the new process from where it left off.

Example for Interview:

- “The dispatcher is like a traffic controller that carries out the scheduler’s decision, handing the CPU to the chosen process and ensuring that the context switch occurs smoothly.”

16. What is the Difference Between Dispatcher and Scheduler?

- Scheduler: The scheduler is responsible for selecting which process to run next from the ready queue, based on a particular scheduling algorithm (e.g., FCFS, Round Robin).

- Dispatcher: Once the scheduler selects a process, the dispatcher takes over, switching the context to the selected process.

Example for Interview:

- “While the scheduler decides which process should run, the dispatcher ensures that the CPU is handed over to that process.”

17. What is Peterson’s Solution?

Peterson’s Solution is a classic software algorithm for achieving mutual exclusion between two processes. It uses two shared variables: a flag array in which each process signals its intention to enter the critical section, and a turn variable that decides who waits when both want to enter. Together they guarantee mutual exclusion, progress, and bounded waiting. On modern multiprocessors that reorder memory operations, the algorithm is only correct when memory barriers or atomic operations are added.

Example for Interview:

- “Peterson’s Solution is a software-based way of solving the critical section problem in a two-process environment without using hardware locks.”
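The flag-and-turn protocol can be sketched with two Python threads. This is an illustration only: it relies on the assumption that standard CPython executes these simple loads and stores in program order, and real code should use a proper lock. The function name `run_peterson` and the shared counter are invented for the example.

```python
import threading
import time


def run_peterson(rounds=200):
    """Two threads increment a shared counter, guarded by Peterson's algorithm."""
    flag = [False, False]   # flag[i] is True while thread i wants to enter
    turn = 0                # the thread that must wait when both want to enter
    counter = 0             # shared data touched only inside the critical section

    def worker(me):
        nonlocal turn, counter
        other = 1 - me
        for _ in range(rounds):
            flag[me] = True            # announce intent
            turn = other               # give priority to the other thread
            while flag[other] and turn == other:
                time.sleep(0)          # busy-wait, letting the other thread run
            counter += 1               # critical section
            flag[me] = False           # exit section

    threads = [threading.Thread(target=worker, args=(i,)) for i in (0, 1)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(run_peterson())   # each thread adds `rounds` increments
```

In an interview it is worth adding that languages such as C or Java would need memory fences or volatile/atomic variables for this to be safe on real hardware.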

18. What are the Classical Problems of Process Synchronization?

There are several classical problems in process synchronization that deal with managing processes accessing shared resources:

- Critical Section Problem: Ensuring that no two processes can execute their critical section at the same time.

- Producer-Consumer Problem: Synchronizing the access to a shared buffer between producer and consumer processes.

- Readers-Writers Problem: Managing access to shared data for processes that either read or write.

- Dining Philosophers Problem: Solving synchronization problems in a situation with limited resources (forks) and multiple processes (philosophers).

Example for Interview:

- “The producer-consumer problem is a classic synchronization issue where one process is producing data and another is consuming it, with both needing access to a shared buffer.”
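As an illustration of the producer-consumer problem, the sketch below uses the textbook arrangement of two counting semaphores plus a mutex. The function name `run_producer_consumer` and the default capacity are choices made for this example.

```python
import threading
from collections import deque


def run_producer_consumer(items, capacity=2):
    """Pass items from one producer to one consumer through a bounded buffer."""
    buffer = deque()
    empty_slots = threading.Semaphore(capacity)   # free slots in the buffer
    filled_slots = threading.Semaphore(0)         # items waiting to be consumed
    mutex = threading.Lock()                      # protects the buffer itself
    consumed = []

    def producer():
        for item in items:
            empty_slots.acquire()      # wait until there is room
            with mutex:
                buffer.append(item)
            filled_slots.release()     # signal that an item is available

    def consumer():
        for _ in range(len(items)):
            filled_slots.acquire()     # wait until there is an item
            with mutex:
                item = buffer.popleft()
            empty_slots.release()      # signal that a slot is free again
            consumed.append(item)

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed


if __name__ == "__main__":
    print(run_producer_consumer(list(range(10))))
```

The two semaphores handle waiting (a full buffer blocks the producer, an empty one blocks the consumer), while the mutex only protects the buffer while it is being modified.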

19. What is a Critical Section Problem?

The Critical Section Problem involves ensuring that no two processes can access a shared resource at the same time. This ensures data consistency and prevents race conditions when multiple processes are running concurrently.

Example for Interview:

- “The critical section problem is about ensuring that a process can enter its critical section and access shared data without interference from other processes.”

20. What are Page Replacement Algorithms in Operating Systems?

Page replacement algorithms determine which memory page should be swapped out when a new page needs to be loaded into memory. Common page replacement algorithms include:

- FIFO (First In, First Out): The oldest page in memory is replaced.

- LRU (Least Recently Used): The page that hasn’t been used for the longest time is replaced.

- Optimal (OPT): The page that will not be used for the longest time in the future is replaced.

Example for Interview:

- “LRU is commonly used because it tends to replace the page that is least likely to be used soon, improving performance.”
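To compare these policies on paper, it helps to count faults on a small reference string. The sketch below simulates LRU and Optimal. The function names are illustrative, and the reference string is a commonly used textbook example run with 3 frames.

```python
def lru_faults(references, frames):
    """Count page faults under Least Recently Used replacement."""
    memory = []                       # least recently used page at index 0
    faults = 0
    for page in references:
        if page in memory:
            memory.remove(page)       # hit: will be re-added as most recent
        else:
            faults += 1
            if len(memory) == frames:
                memory.pop(0)         # evict the least recently used page
        memory.append(page)
    return faults


def opt_faults(references, frames):
    """Count page faults under Optimal replacement (needs future knowledge)."""
    memory = set()
    faults = 0
    for i, page in enumerate(references):
        if page in memory:
            continue
        faults += 1
        if len(memory) == frames:
            future = references[i + 1:]
            # Evict the page whose next use is farthest away, or never happens.
            victim = max(
                memory,
                key=lambda p: future.index(p) if p in future else len(future),
            )
            memory.remove(victim)
        memory.add(page)
    return faults


if __name__ == "__main__":
    refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
    print(lru_faults(refs, 3))   # 12
    print(opt_faults(refs, 3))   # 9
```

Optimal gives the lower bound but cannot be implemented in a real system because it needs the future reference string, which is why LRU and its approximations are used in practice.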

21. What is a Translation Lookaside Buffer (TLB)?

A TLB is a small, fast cache that stores recent translations of virtual addresses to physical addresses. It speeds up memory access by avoiding the need to repeatedly search the page table for address translation.

Example for Interview:

- “A TLB helps avoid the overhead of going through the full page table, significantly improving the system’s memory access speed.”

22. What is Address Translation in Paging?

In paging, address translation refers to converting a virtual address into a physical address. The system uses a page table to map virtual pages to physical memory locations.

Example for Interview:

- “Address translation in paging allows the operating system (OS) to handle memory efficiently by breaking large memory blocks into smaller, fixed-size pages.”
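The translation itself is simple arithmetic: split the virtual address into a page number and an offset, look up the frame, and recombine. Here is a minimal sketch, assuming 4 KiB pages and a single-level page table stored as a dictionary. Both are simplifications chosen for the example.

```python
PAGE_SIZE = 4096   # assumed page size of 4 KiB


def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    """Translate a virtual address using a {page number: frame number} table."""
    page_number, offset = divmod(virtual_address, page_size)
    if page_number not in page_table:
        # A real OS would handle this page fault by loading the page.
        raise LookupError(f"page fault: page {page_number} is not mapped")
    frame_number = page_table[page_number]
    return frame_number * page_size + offset


if __name__ == "__main__":
    page_table = {0: 5, 1: 2}             # hypothetical mappings
    print(translate(4100, page_table))    # page 1, offset 4 -> 2 * 4096 + 4 = 8196
```

The offset is copied unchanged because pages and frames have the same size. Only the page number is replaced by a frame number.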

23. What is Round Robin CPU Scheduling Algorithm?

The Round Robin (RR) scheduling algorithm assigns each process a fixed time slice (quantum). After each time slice, the process is preempted and placed at the end of the ready queue, and the next process gets a turn. This continues until all processes complete.

Example for Interview:

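- “Round Robin is fair because each process gets an equal share of the CPU, but performance degrades if the time slice is too small, because context-switch overhead starts to dominate, and it behaves like FCFS if the time slice is too large.”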
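Interviewers sometimes ask you to trace Round Robin by hand, so a small simulation is a useful check. This sketch assumes all processes arrive at time 0 and ignores context-switch cost. The function name and the process names are illustrative.

```python
from collections import deque


def round_robin(burst_times, quantum):
    """Return each process's completion time under Round Robin scheduling."""
    remaining = dict(burst_times)
    queue = deque(burst_times)        # ready queue in arrival order
    clock = 0
    completion = {}
    while queue:
        pid = queue.popleft()
        run = min(quantum, remaining[pid])   # run for one quantum or until done
        clock += run
        remaining[pid] -= run
        if remaining[pid] == 0:
            completion[pid] = clock
        else:
            queue.append(pid)         # preempted: back to the end of the queue
    return completion


if __name__ == "__main__":
    print(round_robin({"P1": 24, "P2": 3, "P3": 3}, quantum=4))
    # P2 finishes at 7, P3 at 10, P1 at 30
```

The short processes finish quickly even though a long one arrived first, which is the main advantage over FCFS for interactive workloads.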

24. What are Monitors in the Context of Operating Systems?

A monitor is a synchronization construct that allows safe sharing of resources between processes. It provides a high-level abstraction for managing mutual exclusion and synchronization. It includes condition variables for waiting and signaling.

Example for Interview:

- “Monitors provide a safer, higher-level way of synchronizing processes compared to semaphores, as they automatically handle mutual exclusion.”
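Python has no monitor keyword, but a class that hides one lock and its condition variables behind its methods behaves like one. The bounded buffer below is a sketch of that idea. The class name `BoundedBuffer` and its methods are invented for illustration.

```python
import threading
from collections import deque


class BoundedBuffer:
    """Monitor-style buffer: callers never touch the lock directly."""

    def __init__(self, capacity):
        self._items = deque()
        self._capacity = capacity
        self._lock = threading.Lock()
        # Two condition variables that share the monitor's single lock.
        self._not_full = threading.Condition(self._lock)
        self._not_empty = threading.Condition(self._lock)

    def put(self, item):
        with self._lock:                          # enter the monitor
            while len(self._items) == self._capacity:
                self._not_full.wait()             # releases the lock while waiting
            self._items.append(item)
            self._not_empty.notify()              # wake one waiting consumer

    def get(self):
        with self._lock:
            while not self._items:
                self._not_empty.wait()
            item = self._items.popleft()
            self._not_full.notify()               # wake one waiting producer
            return item


if __name__ == "__main__":
    buf = BoundedBuffer(2)
    producer = threading.Thread(target=lambda: [buf.put(i) for i in range(5)])
    producer.start()
    print([buf.get() for _ in range(5)])
    producer.join()
```

Compared with raw semaphores, the locking discipline lives in one place, so callers cannot forget to acquire or release the lock.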

25. What Do You Understand by Belady’s Anomaly?

Belady’s Anomaly occurs in some page replacement algorithms, where increasing the number of page frames (memory) results in an increase in page faults, which is counterintuitive.

Example for Interview:

- “Belady’s Anomaly is the paradoxical situation where adding more memory can worsen performance in certain algorithms, like FIFO.”

26. Why Do Stack-Based Algorithms Not Suffer From Belady’s Anomaly?

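Stack-based page replacement algorithms (like LRU and Optimal) don’t suffer from Belady’s Anomaly because they satisfy the inclusion property: the set of pages kept in memory with n frames is always a subset of the set kept with n + 1 frames. Any reference that is a hit with n frames is therefore also a hit with n + 1 frames, so adding frames can never increase the number of page faults. FIFO does not have this property.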

Example for Interview:

- “Stack-based algorithms are immune to Belady’s Anomaly because the pages they keep with n frames are always a subset of the pages they keep with n + 1 frames.”
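What sets stack algorithms apart is the inclusion property: with one more frame, memory always holds a superset of the pages it held before. It can be checked empirically. The sketch below tracks the resident pages after every reference under LRU and FIFO and tests whether the smaller memory is always a subset of the larger one. The function names and the `policy` strings are choices made for this example.

```python
def resident_sets(references, frames, policy):
    """Yield the set of resident pages after each reference."""
    memory = []                        # next eviction candidate at index 0
    for page in references:
        if page in memory:
            if policy == "lru":        # only LRU refreshes a page on a hit
                memory.remove(page)
                memory.append(page)
        else:
            if len(memory) == frames:
                memory.pop(0)
            memory.append(page)
        yield set(memory)


def has_inclusion_property(references, frames, policy):
    """Check that pages held with `frames` are always held with `frames + 1`."""
    smaller = resident_sets(references, frames, policy)
    larger = resident_sets(references, frames + 1, policy)
    return all(s <= l for s, l in zip(smaller, larger))


if __name__ == "__main__":
    refs = [1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5]
    print(has_inclusion_property(refs, 3, "lru"))    # True
    print(has_inclusion_property(refs, 3, "fifo"))   # False
```

On this reference string FIFO breaks the property, which is exactly the situation in which extra frames can produce extra faults.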

27. How Can Belady’s Anomaly Be Eliminated?

Belady’s Anomaly can be avoided by using stack-based page replacement algorithms such as LRU or Optimal. Because the pages they keep with n frames are always a subset of the pages they keep with n + 1 frames, adding memory can never increase the number of page faults.

Example for Interview:

- “Using stack algorithms like LRU or Optimal avoids Belady’s Anomaly, because with these algorithms adding frames never increases the number of page faults.”

28. What Happens if a Non-Recursive Mutex Is Locked More Than Once?

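If a thread locks a non-recursive mutex that it already holds, it blocks waiting for itself and deadlocks. The exact outcome depends on the mutex type: with POSIX threads, a normal mutex deadlocks, an error-checking mutex returns an error, and a default mutex has undefined behavior. A recursive mutex is the type designed to be locked repeatedly by the same thread.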

Example for Interview:

- “A non-recursive mutex can cause a deadlock if locked multiple times by the same thread because it doesn’t allow re-entry.”
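The difference is easy to observe in Python, where `threading.Lock` is non-recursive and `threading.RLock` is recursive. The sketch below makes the second acquire non-blocking so that it reports failure instead of hanging. The helper name `try_double_lock` is made up for the example.

```python
import threading


def try_double_lock(lock):
    """Acquire the lock, then try to acquire it again from the same thread."""
    lock.acquire()
    try:
        # Non-blocking attempt: a blocking call on a plain Lock would hang here.
        second = lock.acquire(blocking=False)
        if second:
            lock.release()             # undo the second, successful acquire
        return second
    finally:
        lock.release()                 # undo the first acquire


if __name__ == "__main__":
    print(try_double_lock(threading.Lock()))    # False: the lock is already held
    print(try_double_lock(threading.RLock()))   # True: re-entry is allowed
```

A recursive lock tracks its owner and a hold count, so it must be released as many times as it was acquired.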

29. What Is the SCAN Algorithm?

The SCAN Algorithm is a disk scheduling algorithm that moves the disk arm in one direction, servicing requests along the way, until it reaches the end of the disk, then reverses direction and services the remaining requests. It is also known as the elevator algorithm.

Example for Interview:

- “The SCAN algorithm works like an elevator moving up and down, serving requests in one direction before reversing and serving in the other direction.”
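A worked example helps when you are asked to compute head movement. The sketch below returns the service order and the total cylinders travelled, using a request queue commonly seen in textbook exercises. The function name `scan` and its parameters are illustrative, and for simplicity the arm only travels to the edge of the disk when requests remain on the other side.

```python
def scan(requests, head, max_cylinder, direction="down"):
    """Return (service order, total head movement) for SCAN disk scheduling."""
    lower = sorted((r for r in requests if r < head), reverse=True)
    upper = sorted(r for r in requests if r >= head)
    if direction == "down":
        first, second, edge = lower, upper, 0
    else:
        first, second, edge = upper, lower, max_cylinder
    order = first + second
    if second:
        # Sweep to the edge, reverse, then travel to the farthest request.
        movement = abs(head - edge) + abs(edge - second[-1])
    elif first:
        movement = abs(head - first[-1])   # simplification: no reversal needed
    else:
        movement = 0
    return order, movement


if __name__ == "__main__":
    queue = [98, 183, 37, 122, 14, 124, 65, 67]
    print(scan(queue, head=53, max_cylinder=199))
    # ([37, 14, 65, 67, 98, 122, 124, 183], 236)
```

Starting at cylinder 53 and moving toward 0, the arm travels 53 cylinders down and 183 back up, for a total of 236.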

30. What Is “Locality of Reference”?

Locality of reference refers to the tendency of a program to access a small set of memory locations repeatedly within a short time. There are two types:

- Temporal Locality: Recently accessed data is likely to be accessed again soon.

- Spatial Locality: Data near recently accessed memory is likely to be accessed soon.

Example for Interview:

- “Programs tend to access a limited number of memory locations in a short period, a phenomenon that improves the efficiency of caching.”

How to Prepare for Operating Systems Interview Questions: A Detailed Guide

Preparing for operating systems (OS) interview questions requires both theoretical knowledge and practical understanding of how modern operating systems work. Operating systems are a fundamental topic for many tech interviews, especially for roles in systems programming, software development, and IT infrastructure. Here’s a detailed guide on how to prepare:

1. Be Prepared for Problem-Solving and Scenario-Based Questions

In addition to theoretical knowledge, interviewers may ask you to solve real-world OS problems. Practice solving these kinds of problems:

- Memory Allocation: How would you implement a memory allocation system that avoids fragmentation?

- CPU Scheduling: Given a set of processes with different burst times, use a scheduling algorithm to determine the execution order.

- Deadlock Handling: Given a scenario with multiple processes and resources, identify potential deadlocks and propose strategies to prevent them.

Tip: You might be asked to write code to simulate or solve problems related to memory management, scheduling, or file systems.
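As an example of the kind of code you might be asked for, here is a minimal sketch of the CPU scheduling exercise: order processes by non-preemptive Shortest Job First and compare waiting times with FCFS. It assumes all processes arrive at time 0, and the function names and burst times are invented for illustration.

```python
def sjf_order(bursts):
    """Return process IDs sorted by burst time (non-preemptive SJF)."""
    return sorted(bursts, key=bursts.get)


def waiting_times(bursts, order):
    """Return how long each process waits before it first gets the CPU."""
    clock = 0
    waits = {}
    for pid in order:
        waits[pid] = clock            # time spent waiting in the ready queue
        clock += bursts[pid]          # the process then runs to completion
    return waits


def average(values):
    values = list(values)
    return sum(values) / len(values)


if __name__ == "__main__":
    bursts = {"P1": 6, "P2": 8, "P3": 7, "P4": 3}
    fcfs = waiting_times(bursts, list(bursts))
    sjf = waiting_times(bursts, sjf_order(bursts))
    print(average(fcfs.values()))     # 10.25
    print(average(sjf.values()))      # 7.0
```

Being able to explain why SJF minimizes average waiting time, and why it risks starving long jobs, usually matters more than the code itself.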

2. Review Operating System Design and Architecture

Some interviewers may ask you to explain or design an operating system component. For example:

- Design a simple file system: Understand how to create a basic file system with operations like open, read, write, and close.

- Design a memory manager: Explain how you would manage the allocation and deallocation of memory, handle fragmentation, and support paging.

3. Brush Up on Practical Skills

Having hands-on experience with operating systems will give you a deeper understanding. Here’s how to enhance your practical skills:

- Work with Unix/Linux: Many OS concepts are easier to understand when you work with a Unix-based operating system. Learn to use commands and shell scripting, and explore the internal workings of the operating system (e.g., process management, file system, and memory management).

- Practice System Programming: Write programs that deal directly with OS functions like memory management, process scheduling, and I/O operations.

- Work on Projects: Consider working on open-source projects or building your own operating system simulation to practice your understanding of OS concepts.

4. Prepare for Behavioral Questions Related to Operating Systems

According to a LinkedIn report, behavioral questions are considered effective by 75% of hiring managers for evaluating a candidate’s potential performance. While the focus is often on technical knowledge, interviewers may ask behavioral questions that assess your problem-solving approach. Some examples are:

- Tell me about a time when you encountered a challenging system performance issue. How did you identify the root cause, and what steps did you take to resolve it?

- Describe a situation where you had to collaborate with a team to troubleshoot and fix a critical bug in an operating system. How did you approach the problem and communicate with your team to ensure a timely solution?

- Give an example of a project where you had to optimize resource management in an operating system. What strategies did you use to improve efficiency, and how did you measure the success of your improvements?

Be prepared to explain how you approach problems, collaborate with team members, and learn new operating system features.

5. Stay Up-to-Date with New Technologies

Operating systems are evolving alongside technologies like virtualization, cloud computing, containerization (e.g., Docker), and microservices. These topics can come up even in the HR round, so understanding how they interact with operating system concepts is becoming increasingly important in interviews.

- Docker and Kubernetes: Understand containerization and how operating system-level virtualization works in modern systems.

- Cloud Operating Systems: Learn about cloud operating system concepts like distributed file systems and cloud resource management.

6. Mock Interviews and Practice

Finally, conduct mock interviews with peers or use platforms like LeetCode or HackerRank to practice your operating system knowledge. Focus on both solving problems and articulating your thought process clearly during the interview.

7. Review Common OS-Related Algorithms and Data Structures

Many operating systems are built upon efficient algorithms and data structures. Understanding these will help you answer interview questions related to performance optimization and resource management:

- Page Replacement Algorithms: Learn the differences between algorithms like FIFO (First-In-First-Out), LRU (Least Recently Used), and Optimal Page Replacement. Understand their trade-offs in terms of memory and processing efficiency.

- Scheduling Algorithms: Know the details of CPU scheduling algorithms (e.g., Round Robin, Shortest Job First, Priority Scheduling) and how they affect system performance.

- Locking Mechanisms: Understand how operating systems handle concurrency and synchronization using techniques like spinlocks, semaphores, and mutexes.

8. Focus on Multithreading and Multiprocessing Concepts

Interviews often focus on concepts related to multithreading and multiprocessing, as they are critical to modern operating systems:

- Multithreading: Understand the differences between user-level and kernel-level threads, the advantages of multithreading, and the challenges such as thread synchronization, deadlock, and race conditions.

- Multiprocessing: Learn about symmetric and asymmetric multiprocessing, the differences between multi-core and multi-processor systems, and how operating systems manage tasks on these systems.

9. Understand Operating System Security Concepts

- Security and Privacy: Be familiar with the different types of security attacks an operating system may face (e.g., buffer overflow, privilege escalation) and how modern operating systems defend against them.

- User Authentication and Authorization: Understand concepts like authentication protocols, access control mechanisms (e.g., DAC, MAC), and role-based access control (RBAC).

- Secure OS Design: Learn how operating system (OS) designers implement security measures such as secure boot, encrypted file systems, and mandatory access control.

10. Practice Using Operating System Commands and Tools

Some operating system interviews, especially for positions related to systems administration or DevOps, may require you to demonstrate practical knowledge of operating system commands and tools. Practice using:

- Unix/Linux Commands: Familiarize yourself with basic commands for navigating file systems (ls, cd, rm), process management (ps, kill, top), file manipulation (cat, grep, chmod), and networking (ping, ifconfig).

- System Monitoring: Learn how to monitor system performance using tools like top, vmstat, iostat, and netstat to diagnose CPU, memory, and I/O bottlenecks.

11. Work on Real-World Case Studies

Having an understanding of how real-world operating systems work can help you answer case study-based questions during interviews. For instance:

- Linux Kernel: Study how the Linux kernel handles scheduling, memory management, and I/O.

- Windows OS: Learn how Windows handles process management, threading, and inter-process communication (IPC).

- Mobile OS (Android/iOS): Be aware of how mobile operating systems manage limited resources like memory, battery life, and CPU usage.

12. Think About Practical Examples

When explaining your answers in an interview, it’s often helpful to use real-life or practical examples. For instance:

- File Systems: Instead of just explaining how a file system works, use an example like how an ext4 file system works in Linux and how it handles file allocation.

- Scheduling: When explaining scheduling algorithms, provide a practical scenario where you would use Round Robin or Shortest Job First and why one might be preferred over the other in a given situation.

By mastering both theoretical knowledge and practical experience, and by focusing on modern technologies and real-world examples, you’ll be able to answer operating system interview questions effectively. Interview preparation should involve not only studying concepts but also gaining hands-on experience with system-level programming, working with operating system commands, and understanding how modern operating system technologies are applied in today’s computing world.